I have written before about storage for my homelab. I have a NAS, and for the VMware cluster I had USB 3.0 attached 3.5″ hard drive bays. The bays shared a single 5 Gbps USB 3.0 connection. Since storage has come down in price, the drives in them were SATA SSDs. Having (at the time) 4 SATA SSDs sharing a single USB 3.0 connection was not ideal, not only because of the single pipe but also because of the overhead of USB. Whenever vSAN pushed these disks past idle-level IOPS, latency would go through the roof. That was the main item I was attempting to correct.
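To put a rough number on the "single pipe" problem, here is a back-of-the-envelope sketch. It assumes 8b/10b line encoding on both links and that each SSD has a SATA III (6 Gbps) interface; neither figure is something I measured, and real USB protocol overhead would make the shared number worse.

```python
# Rough per-drive bandwidth when several SSDs share one USB 3.0 link.
# Assumptions (not measured): 8b/10b line encoding on both USB 3.0 and
# SATA III, and no protocol overhead beyond that encoding.

USB3_RAW_MBPS = 5000    # the shared 5 Gbps USB 3.0 link, in Mbit/s
SATA3_RAW_MBPS = 6000   # assumed SATA III interface on each SSD, in Mbit/s
DRIVES = 4              # SSDs sharing the USB link at the time


def usable_mb_per_s(raw_mbps: int) -> float:
    """Convert a raw line rate in Mbit/s to usable MB/s after 8b/10b."""
    return raw_mbps * 8 / 10 / 8


usb_total = usable_mb_per_s(USB3_RAW_MBPS)
usb_per_drive = usb_total / DRIVES
sata_per_drive = usable_mb_per_s(SATA3_RAW_MBPS)

print(f"USB 3.0 link, best case: {usb_total:.0f} MB/s total")
print(f"Shared by {DRIVES} drives: {usb_per_drive:.0f} MB/s each")
print(f"Dedicated SATA III link: {sata_per_drive:.0f} MB/s each")
```

Even in this best case, each SSD gets only a fraction of what its own SATA link could carry when all of them are busy.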

Having used “disk shelves” before at work, I thought I would try to make a compact version for my homelab. I figured all I needed was a way to connect the SSDs over external SAS, an eSAS HBA, and some power. This project ended up going on for far too long and ended with a much simpler solution.

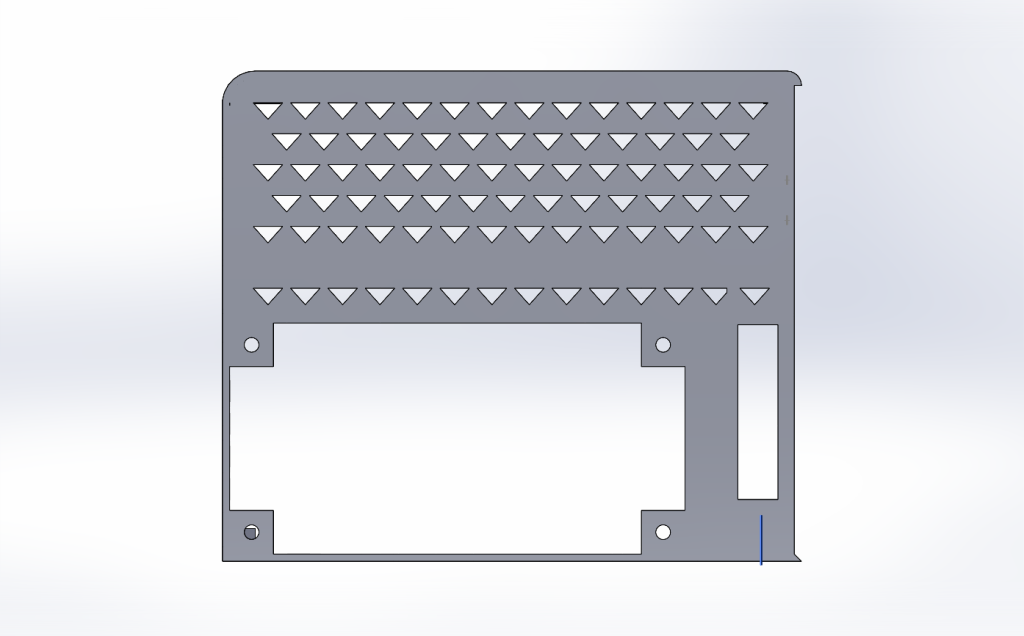

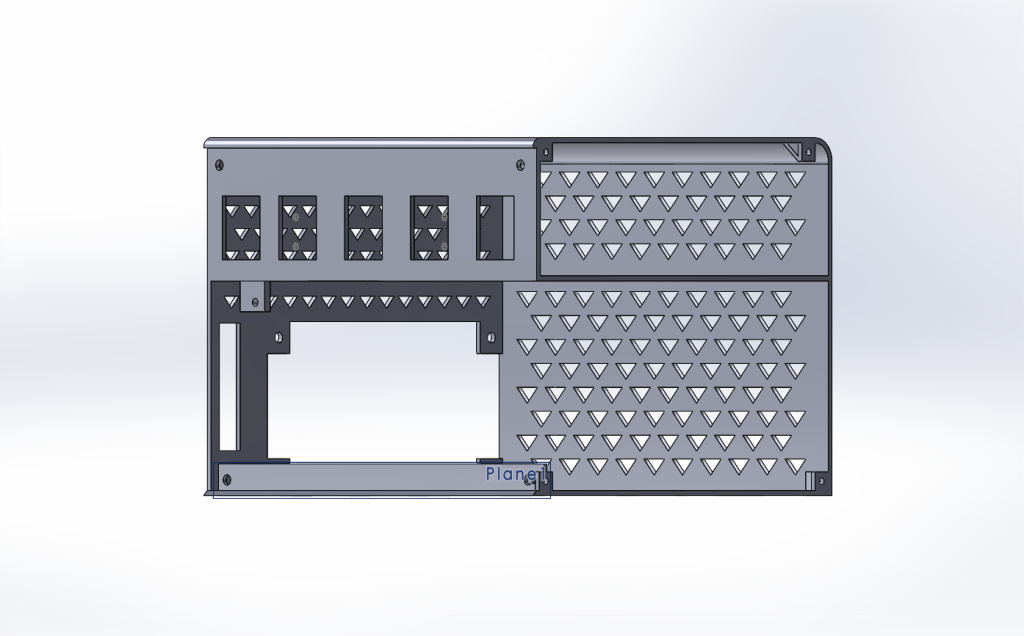

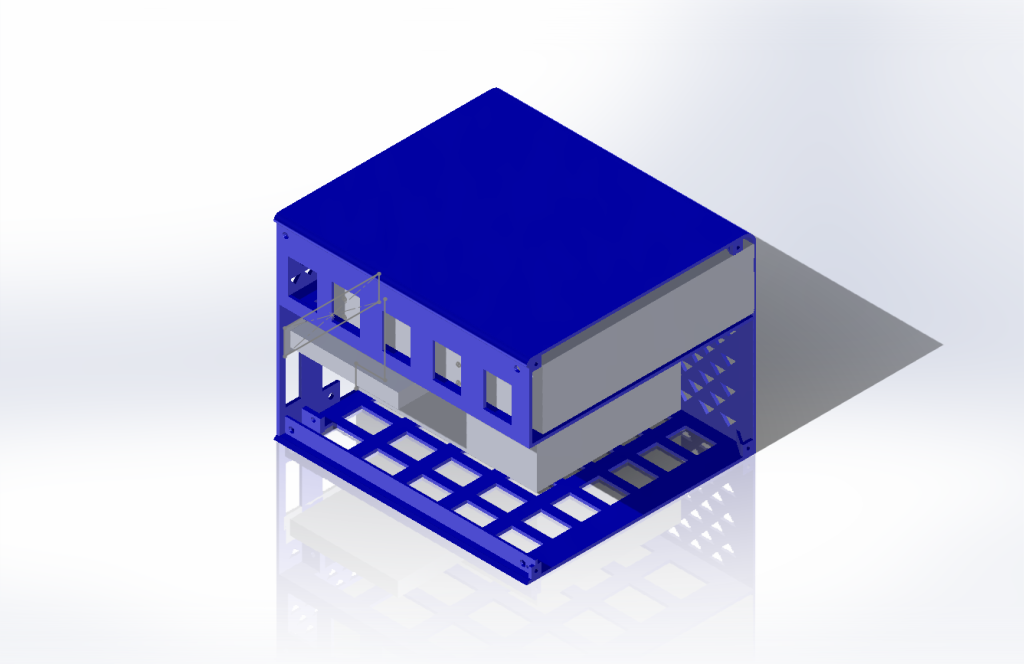

I started where any good project does: finding the general parts I would use. I came across this adapter. It lets you put six 2.5″ drives into a single 5.25″ DVD bay. Each drive gets its own SATA connection, and it even has fans on the back to cool them. I started designing the case around that. Then I found this little adapter to go from 2 internal SAS cables to external SAS. My thought was that I would run eSAS from the enclosure into my “server”, and inside the enclosure break each SAS cable out into 4 SATA connections.

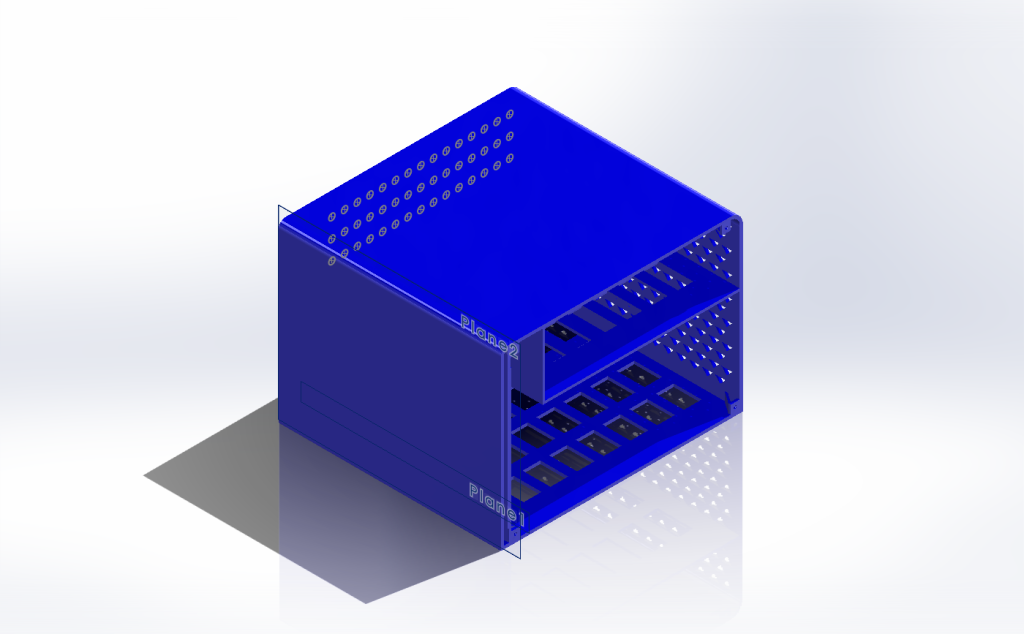

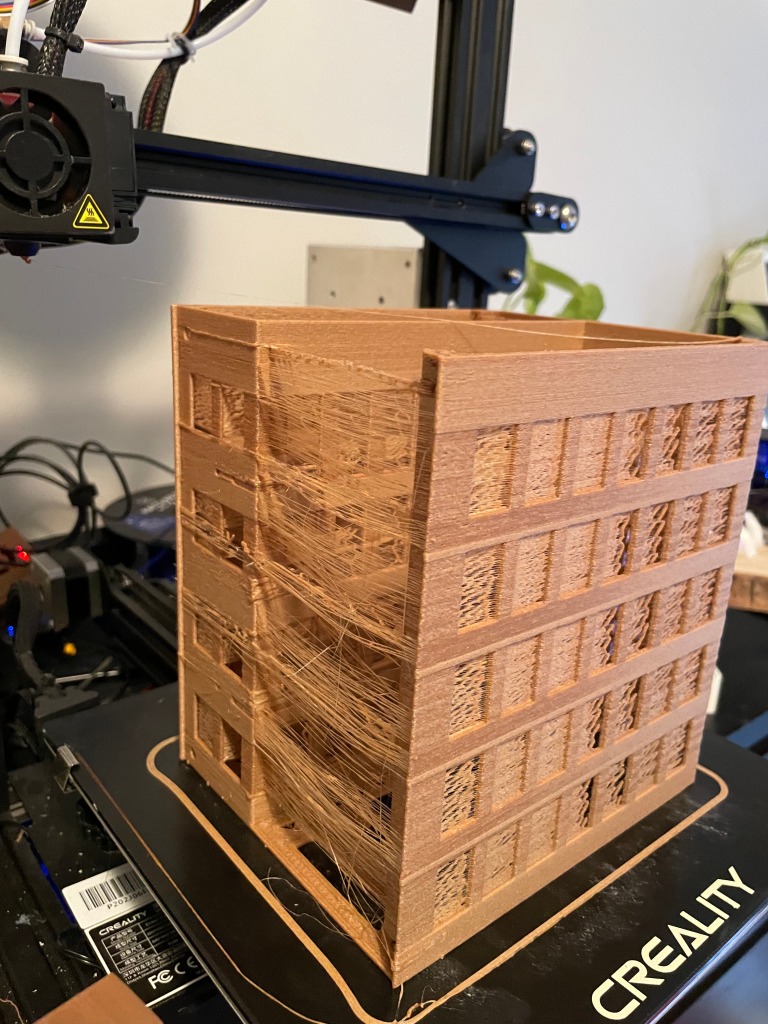

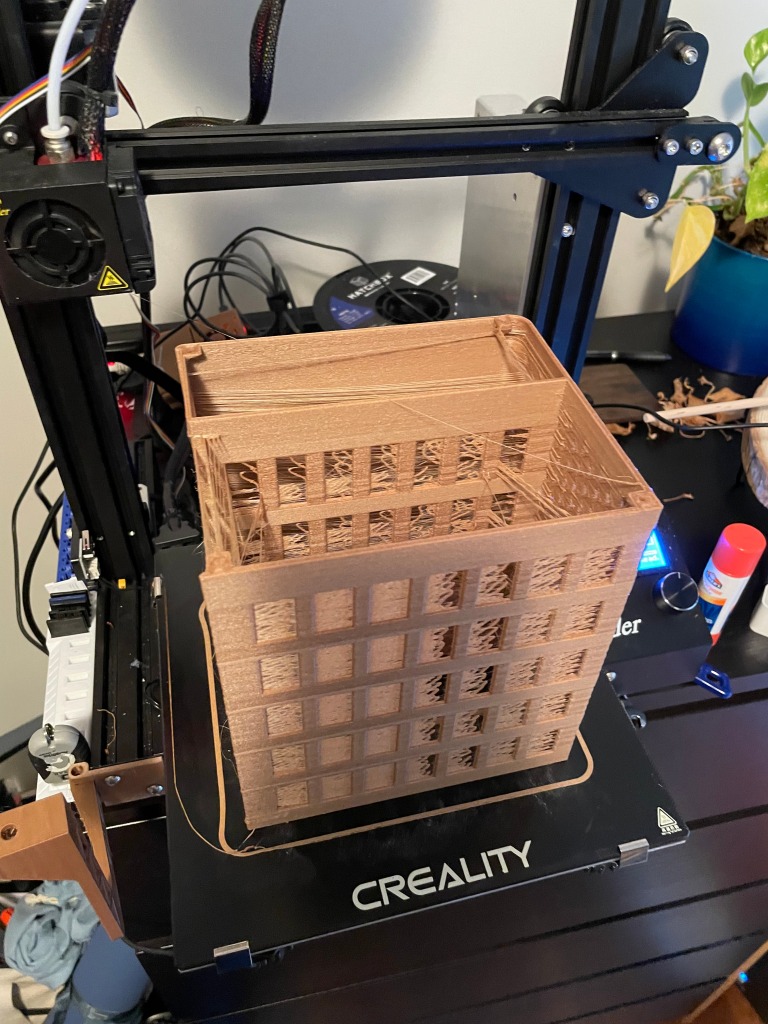

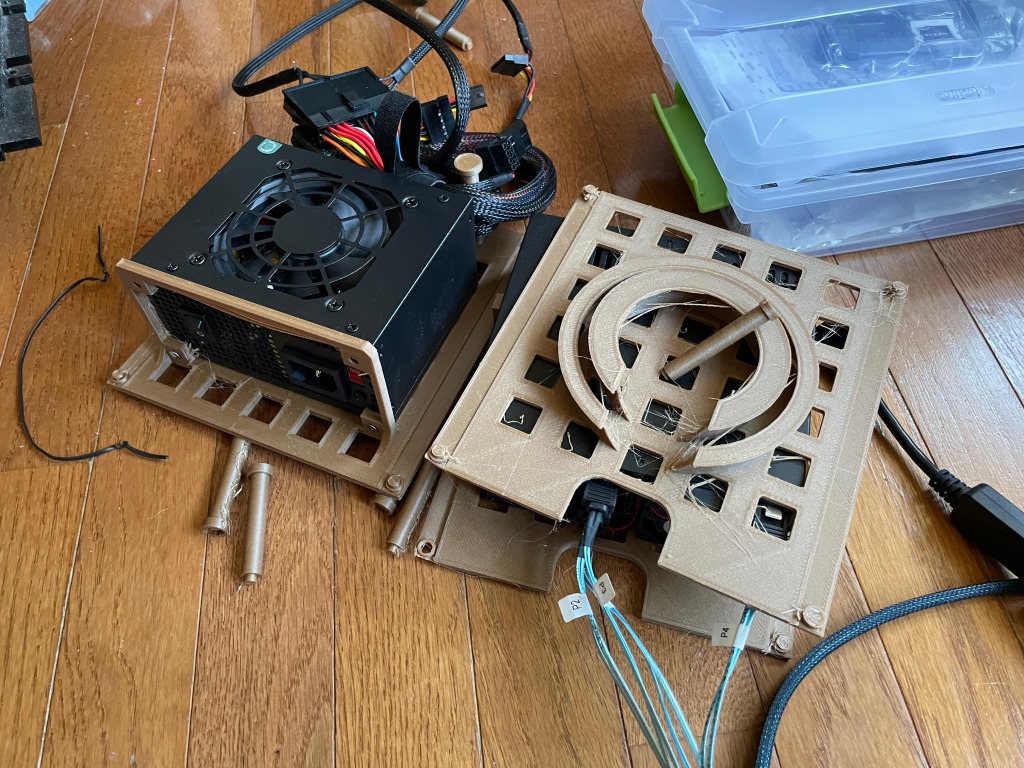

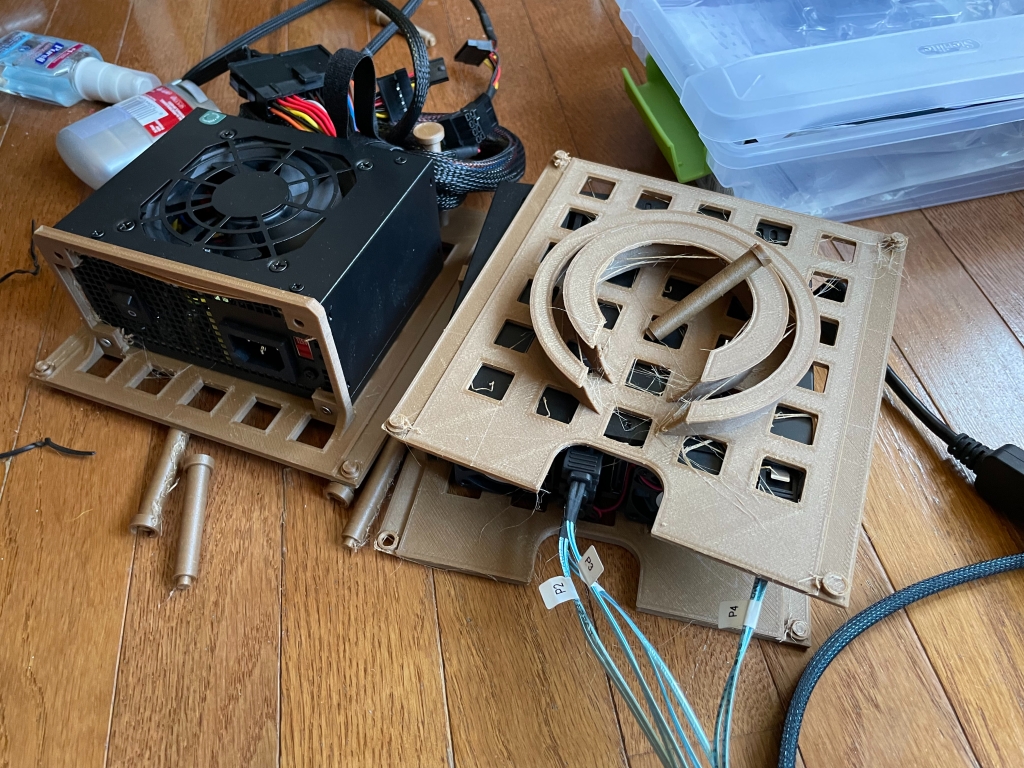

Now I needed to start creating a case to 3D print. Every other eSAS enclosure I found online was HUGE; I wanted something small that could fit the power supply and the connections I needed. This went through many… many… iterations.

Some of the prints didn’t come out great; I spent some time getting the printer dialed in.

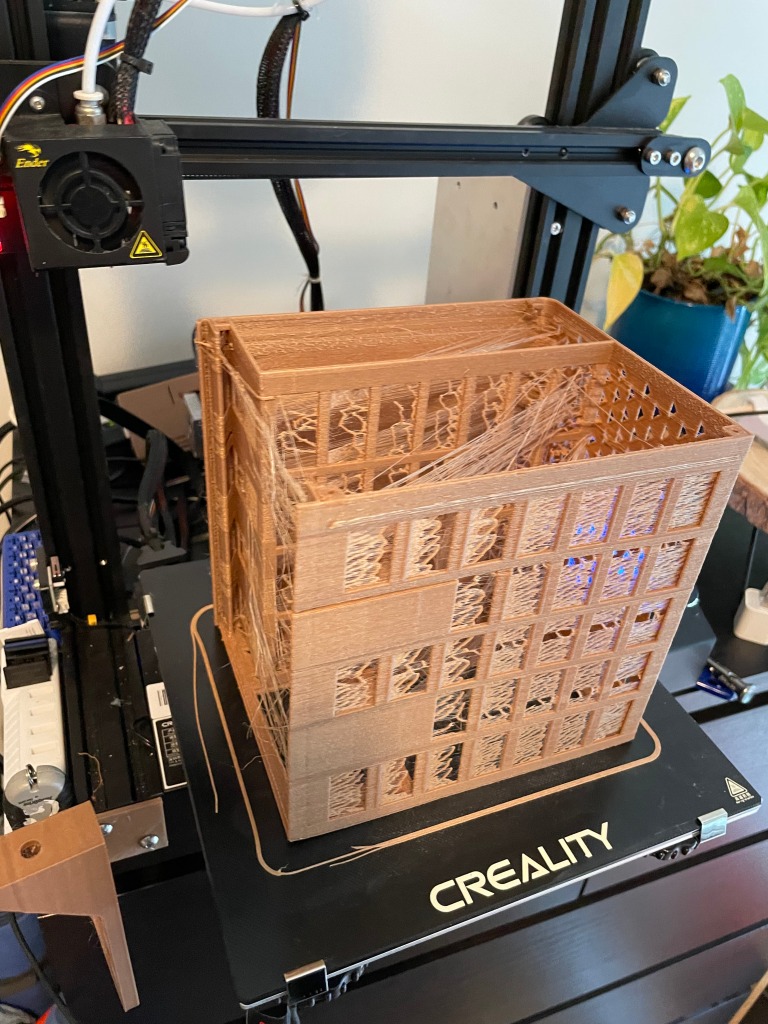

This was a bad path I went down. I was hoping to cut down on plastic and thought I could print separate levels and have them stand on columns. This turned into much more of a mess (and was hard to keep in the right position) than just waiting for the big prints to finish.

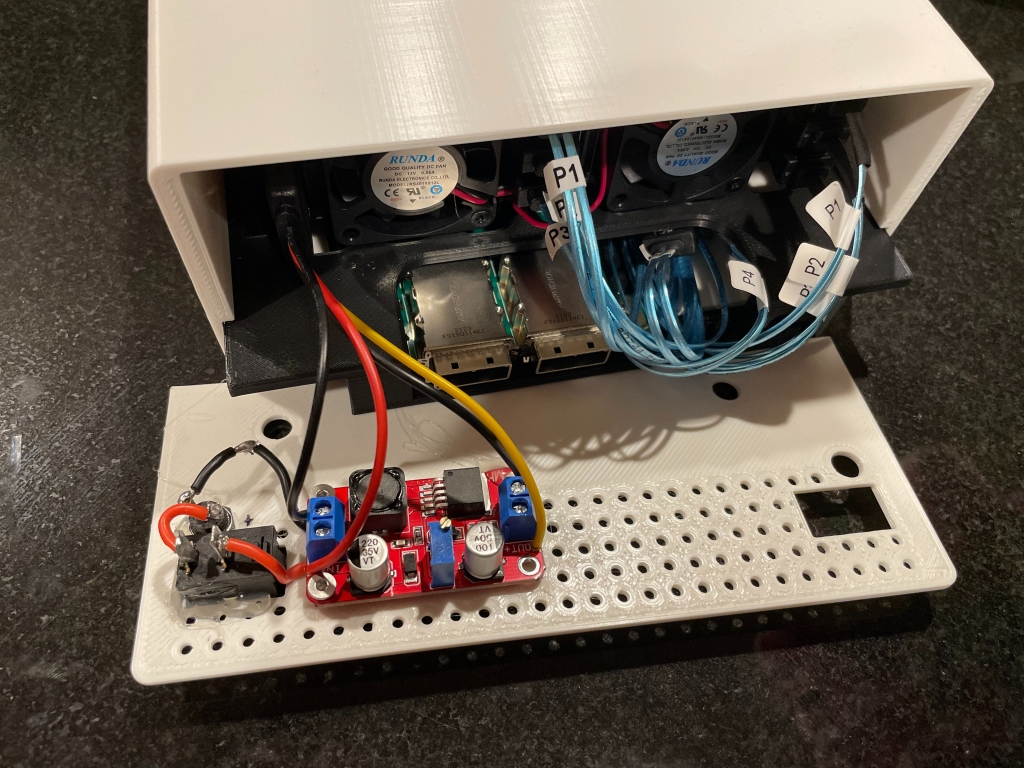

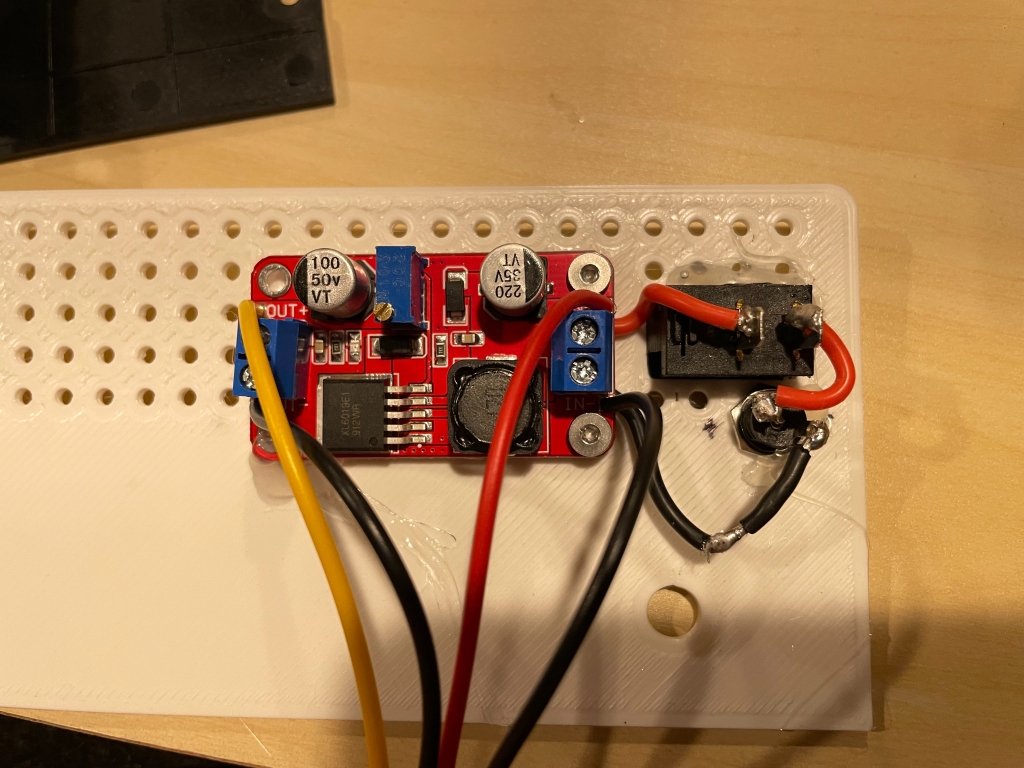

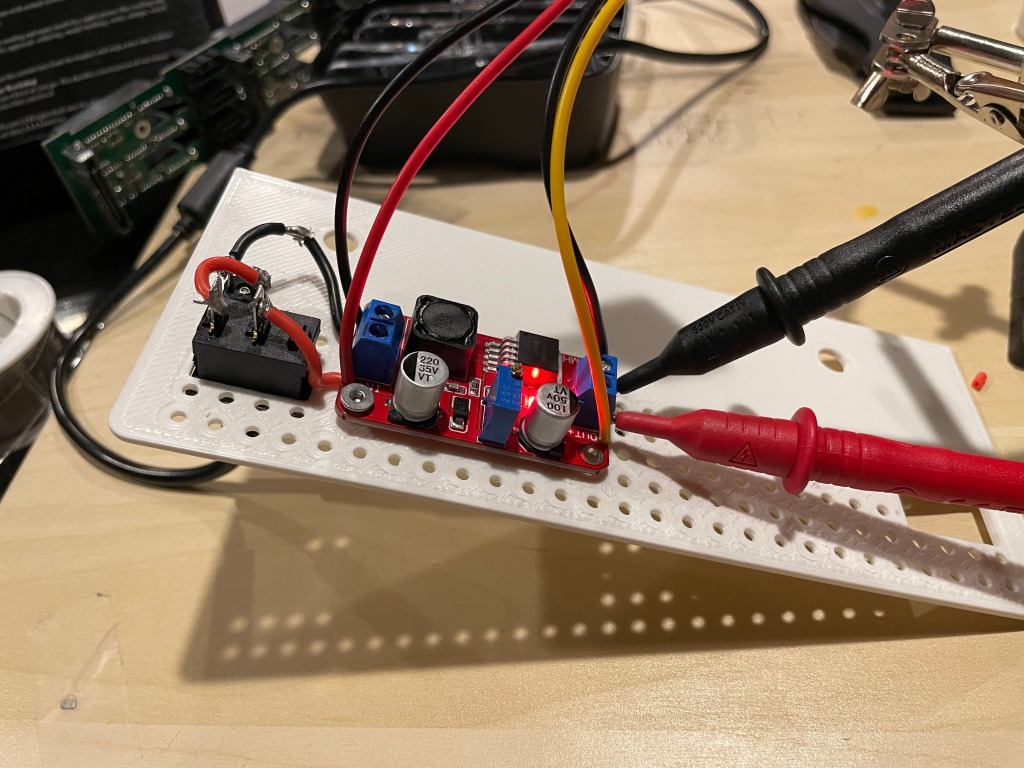

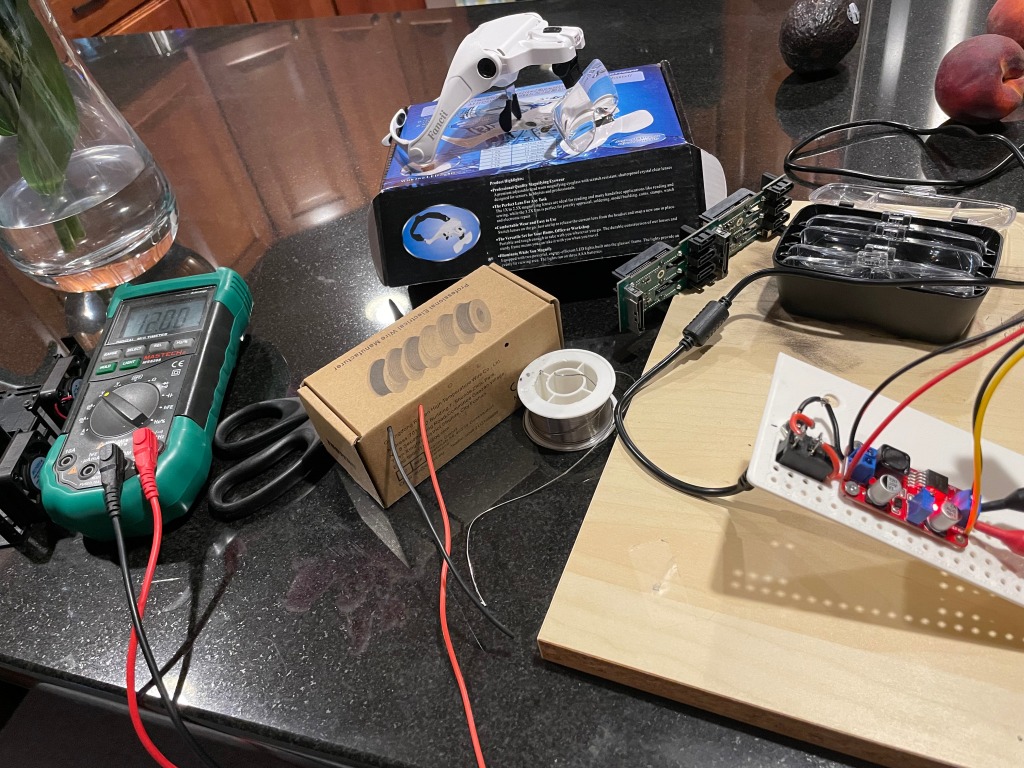

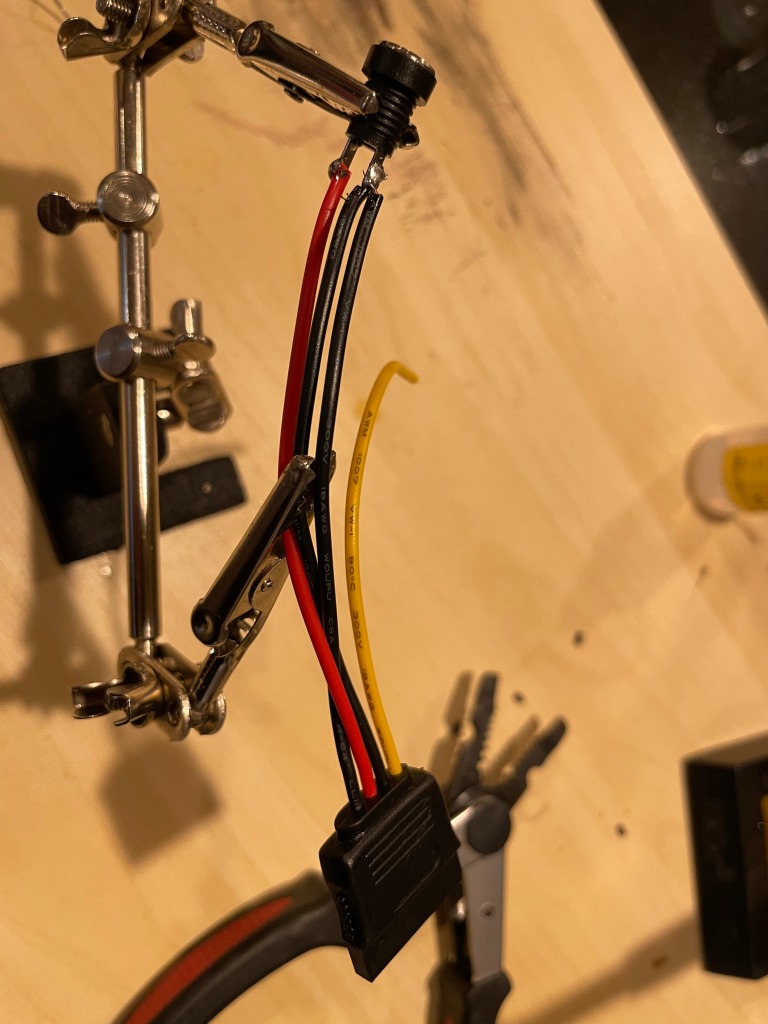

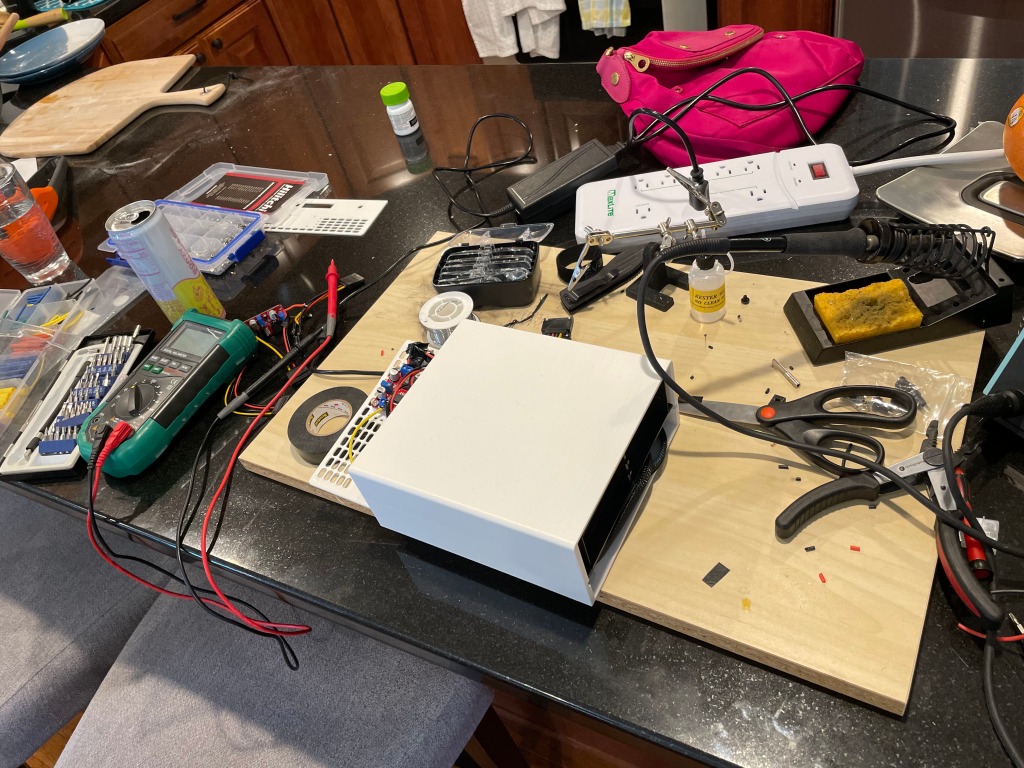

Next I had to figure out power. Each drive I had can pull up to 1.5 amps at 5 volts. With all six bays filled, that means I need 9 amps on the 5 volt rail. That is a good amount of power on one rail. I thought I could use a standard PC power supply, with a cable to turn it on with a switch, but these PSUs were big and made the design a bit bulkier. The next idea was to just use a wall power supply, a 5 volt one with enough amps. Also, I planned to only use the 4 drives per unit I had, so at least at first, I could cut the amp requirement down.
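The power math is simple enough to sketch. This uses the 1.5 amp per-drive figure from above and compares the six bays the adapter holds with the four drives I planned to start with; the function name is just for illustration, and the fans are not included.

```python
# 5 V rail budget for the drive enclosure.
# AMPS_PER_DRIVE is the peak per-drive draw quoted above; the fans run on
# 12 V and are deliberately left out of this estimate.

AMPS_PER_DRIVE = 1.5   # peak amps per SSD on the 5 V rail
RAIL_VOLTS = 5.0


def rail_budget(drives: int) -> tuple[float, float]:
    """Return (amps, watts) needed on the 5 V rail for `drives` SSDs."""
    amps = drives * AMPS_PER_DRIVE
    return amps, amps * RAIL_VOLTS


for drives in (6, 4):
    amps, watts = rail_budget(drives)
    print(f"{drives} drives: {amps:.1f} A, {watts:.1f} W on the 5 V rail")
```

Starting with four drives drops the requirement from 9 amps to 6 amps, which is much easier to find in a wall supply.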

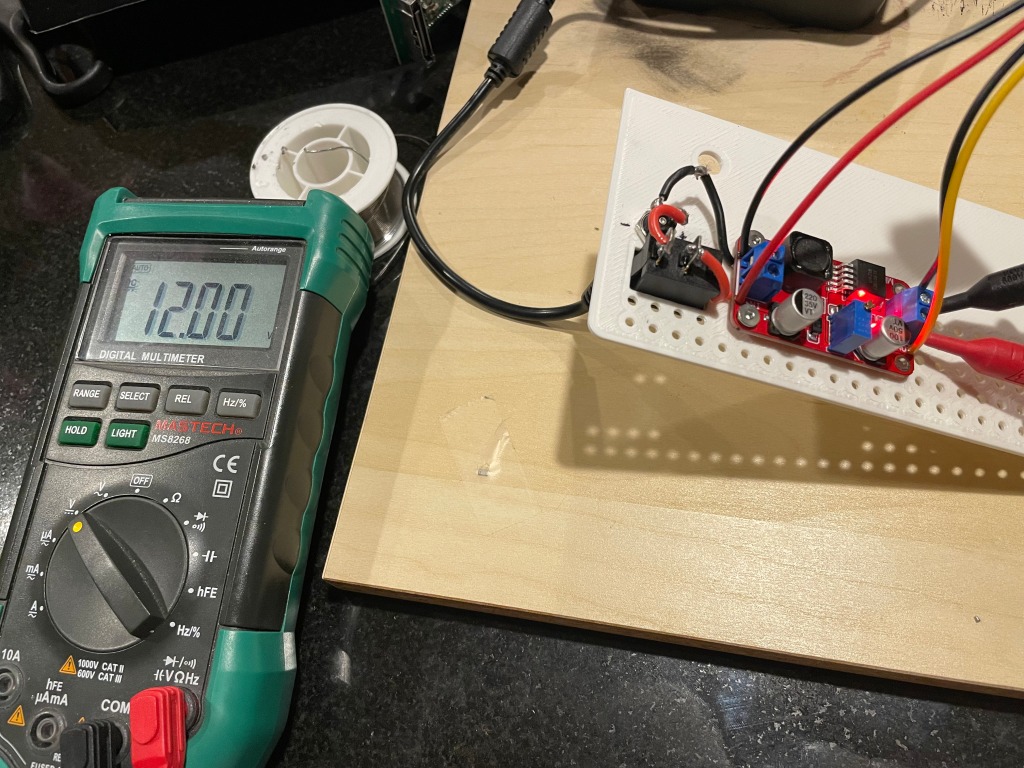

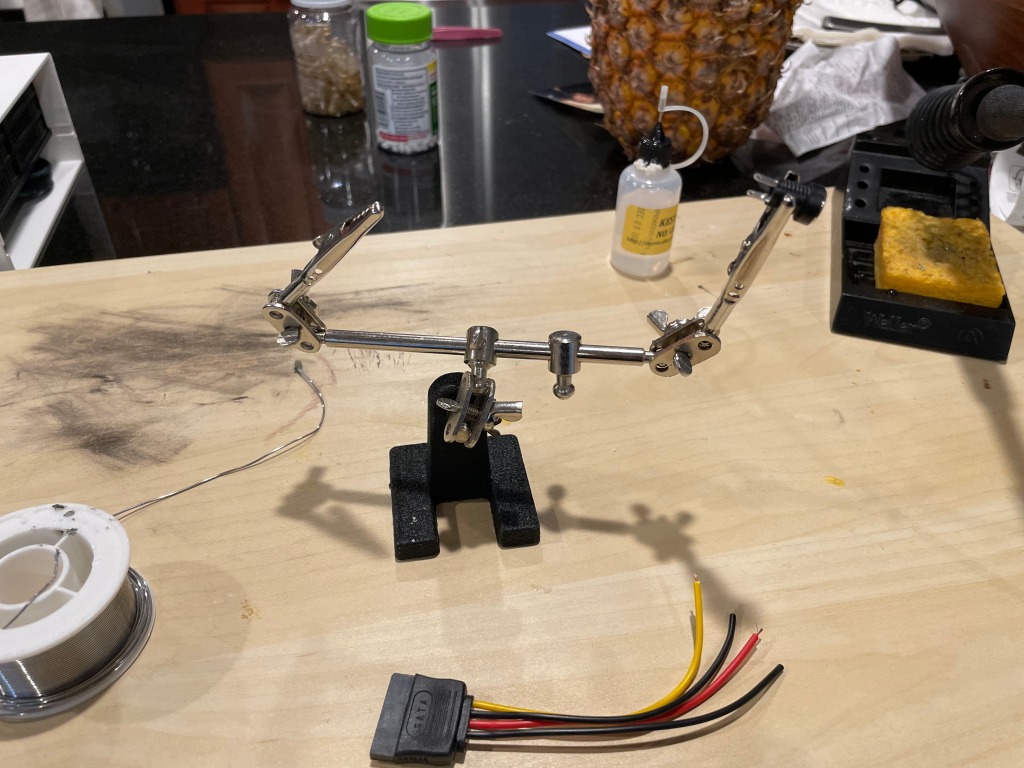

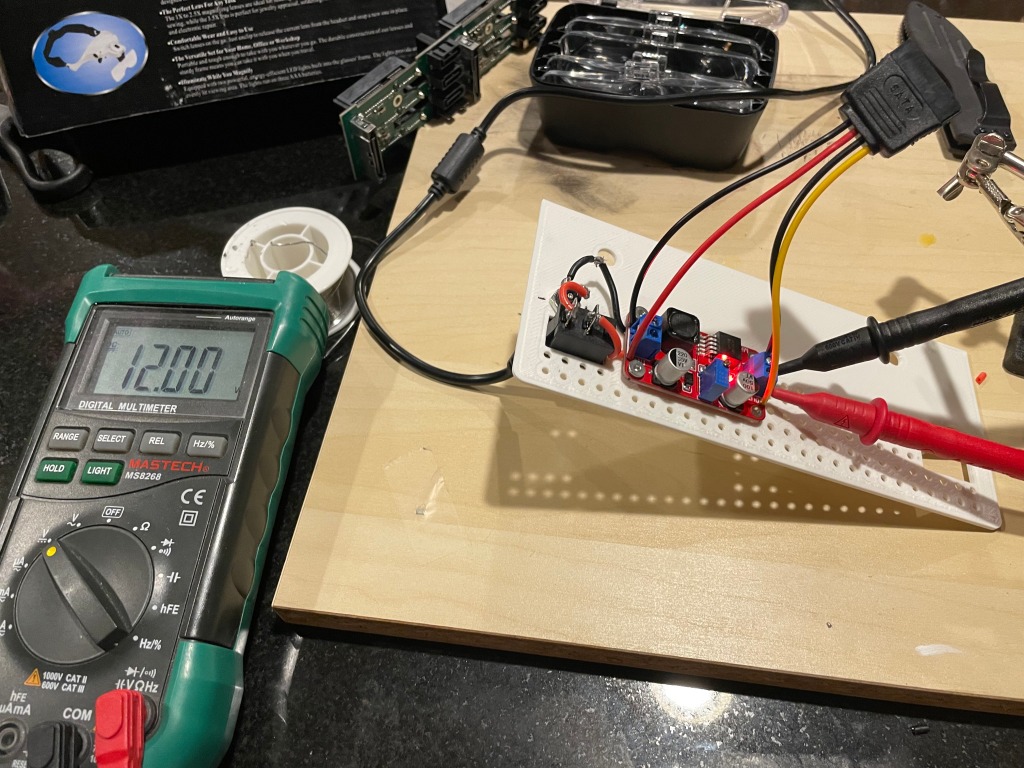

Now I ran into a new problem. The fans for the drive holder ran on the 12 volt line of the SATA power cable. The drives only needed 5 volts, but the fans needed 12 volts. I got a voltage converter and wired it in. I also added a switch so the whole unit could be turned off and on.

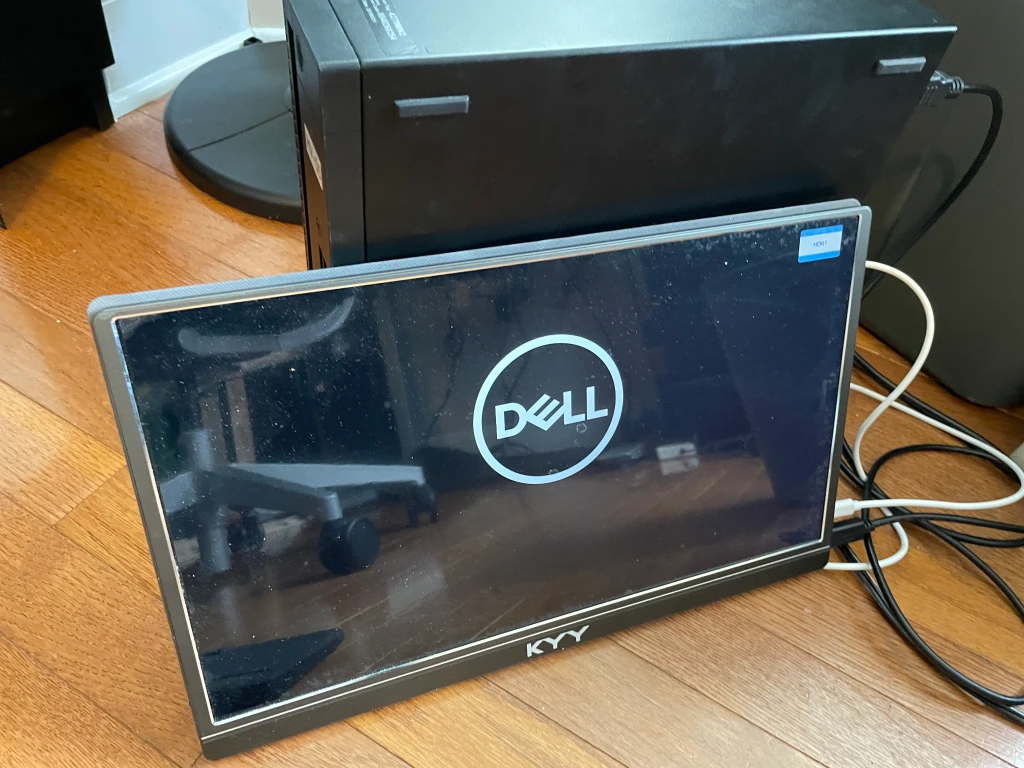

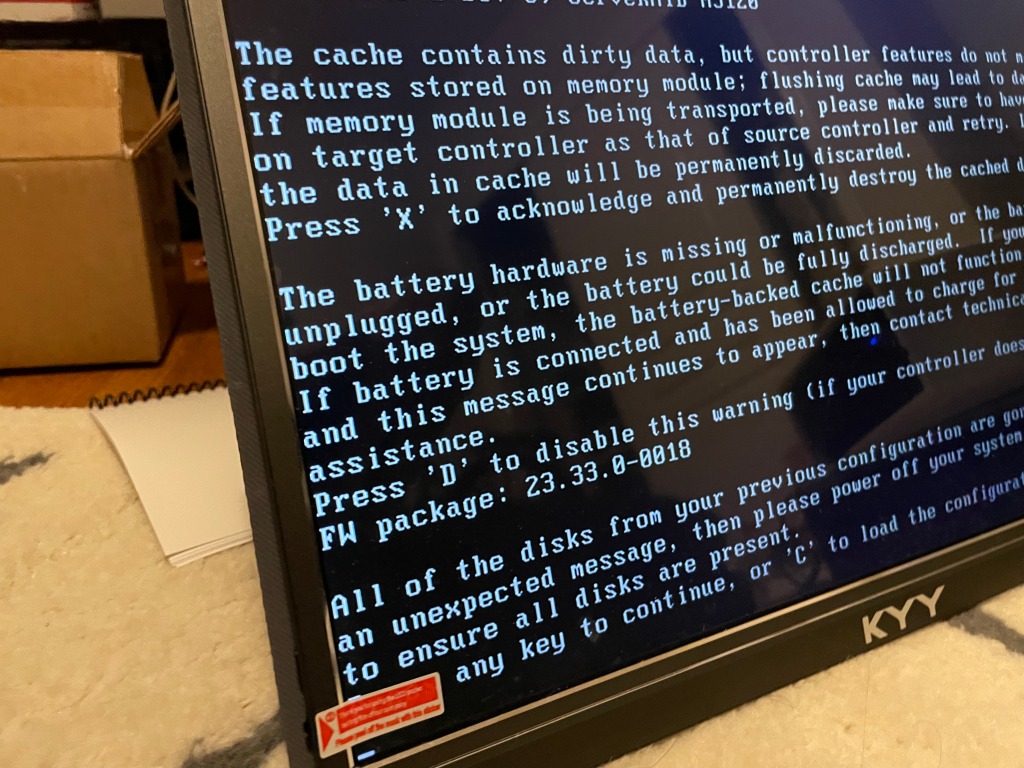

Finally, it was time to add the HBA (not a RAID controller) to the Dell Optiplex and bring the drives up. This is where everything fell apart. The Optiplexes REALLY didn’t want to start with the HBAs installed. I ordered MANY off eBay to try: older gen, newer gen, different chipsets… Sometimes they would see SOME of the drives on start-up, and sometimes they would see the drives after I bounced the container, but there was no consistency. One of the HBAs wouldn’t allow the desktop to boot at all when the card was in. Someone online mentioned that if you put tape over one of the pins on the front of the PCI Express connector, the PC won’t be able to read the bus ID it doesn’t understand, and this will allow it to boot. I couldn’t believe it when that worked! It still had issues seeing the drives, but it was interesting nonetheless.

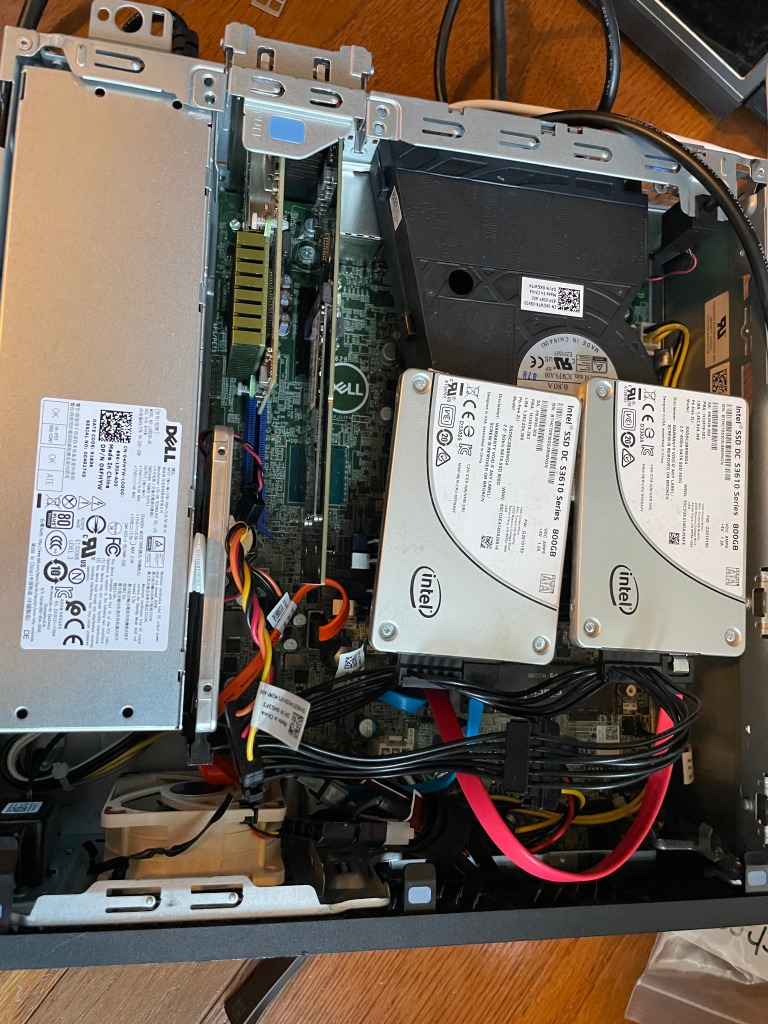

After all of this, I decided it was too much hassle and I wanted something more reliable for the system. I did what I should have done from the start… used the ports the systems already had. I went from 4 SATA SSDs to 3 SATA SSDs and 2 NVMe drives: one in the onboard NVMe slot, and another in the PCIe x4 slot that I had. I tried a PCIe card that allows 4 NVMe drives by PCIe bifurcation. This is a newer feature which only a few systems support, and these Optiplexes don’t, in either PCIe slot. I also want to flag that even though the chipset in these says it supports 128GB of RAM, and 32GB DIMMs work fine in them, the max on the Optiplex 5050 and 5060 is 64GB. I also added a small Noctua fan to the front of the case for additional airflow.

In the end, each of the VMware nodes has 3 roughly 1TB SSDs and 2 NVMe drives, one for vSAN cache and one for normal storage. I am booting the nodes off a USB drive in the back. It is not the most supported config, but it has been working well for me. The machines have a dual 10Gb NIC in the x16 slot and the secondary NVMe in the x4 slot.